Explore and extend models from the latest cutting edge research.

Discover and publish models to a pre-trained model repository designed for research exploration. Check out the models for researchers, learn how it works, or contribute your own models.

*This is a beta release – we will be collecting feedback and improving the PyTorch Hub over the coming months.

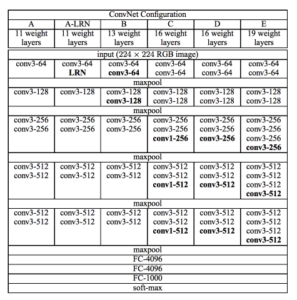

AlexNet

The winner of the 2012 ImageNet challenge, AlexNet achieved a top-5 error of 15.3%, more than 10.8 percentage points lower than that of the runner-up.
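The top-5 error counts a prediction as correct when the true label appears anywhere among the model's five highest-scoring classes. A minimal pure-Python sketch of that metric; the function name `top_k_error` and the toy scores are illustrative assumptions, not part of PyTorch Hub or ImageNet:

```python
from typing import List, Sequence


def top_k_error(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int = 5) -> float:
    """Fraction of samples whose true label is NOT among the k highest-scoring classes."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    misses = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score; keep only the first k.
        top_k: List[int] = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Toy example with 6 classes (made-up scores, not ImageNet data).
scores = [
    [0.10, 0.50, 0.20, 0.90, 0.05, 0.30],  # true class 4 is ranked last
    [0.60, 0.10, 0.15, 0.05, 0.02, 0.08],  # true class 2 is ranked second
]
labels = [4, 2]
print(top_k_error(scores, labels, k=1))  # 1.0
print(top_k_error(scores, labels, k=5))  # 0.5
```

The second sample shows why top-5 is more forgiving than top-1: it is a miss at k=1 but a hit at k=5. By the same measure, the figures above imply a runner-up top-5 error above 15.3% + 10.8 = 26.1%.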

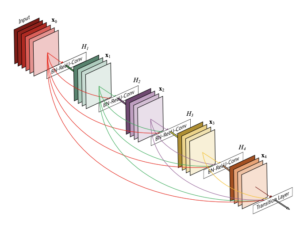

DenseNet

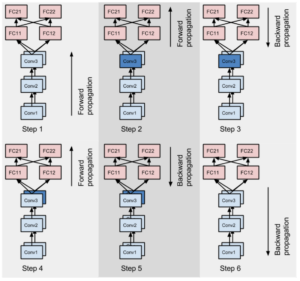

Dense Convolutional Network (DenseNet) connects each layer to every other layer in a feed-forward fashion.
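In a dense block, each layer receives the concatenated outputs of the block input and all preceding layers, so a block of L layers has L(L+1)/2 direct connections. The sketch below illustrates only that wiring pattern under simplifying assumptions: feature maps are flattened into plain lists of floats, and `make_toy_layer` is a hypothetical stand-in for a real BN-ReLU-Conv layer, not torchvision's implementation.

```python
from typing import Callable, List

# Toy stand-in for a stack of feature maps: one float per "channel".
Features = List[float]
Layer = Callable[[Features], Features]


def dense_block(x: Features, layers: List[Layer]) -> Features:
    """Dense connectivity: each layer receives the concatenation of the block
    input and the outputs of every preceding layer, and its own output is
    concatenated (not added) onto that running stack."""
    features = list(x)
    for layer in layers:
        new_features = layer(features)
        features = features + new_features
    return features


def make_toy_layer(growth_rate: int) -> Layer:
    """Hypothetical layer: emits `growth_rate` new channels, each equal to
    the mean of everything it receives."""
    def layer(inputs: Features) -> Features:
        mean = sum(inputs) / len(inputs)
        return [mean] * growth_rate
    return layer


def num_direct_connections(num_layers: int) -> int:
    """Direct connections in a block of L layers (input counted as a source): L(L+1)/2."""
    return num_layers * (num_layers + 1) // 2


# Usage: 3 input channels, 4 layers, growth rate 2 -> 3 + 4 * 2 = 11 channels.
block_out = dense_block([1.0, 2.0, 3.0], [make_toy_layer(2) for _ in range(4)])
print(len(block_out), num_direct_connections(4))  # 11 10
```

Because outputs are concatenated rather than summed, the channel count grows linearly with depth (by the growth rate per layer), and the original input channels pass through the block unchanged.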

Silero Speech-To-Text Models

A set of compact, enterprise-grade pre-trained speech-to-text (STT) models for multiple languages.

Silero Text-To-Speech Models

A set of compact, enterprise-grade pre-trained text-to-speech (TTS) models for multiple languages.

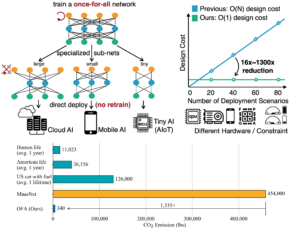

Once-for-All

Once-for-All (OFA) decouples training from search and achieves efficient inference across diverse edge devices and resource constraints.
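Decoupling means the supernet is trained once, and specializing it for a new device reduces to searching its sub-networks. A minimal sketch of that search step, assuming the supernet is already trained: the design space, `SubnetConfig`, and the toy accuracy and latency functions are all hypothetical, and OFA itself relies on learned accuracy predictors and evolutionary search rather than the brute-force enumeration swapped in here.

```python
import itertools
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class SubnetConfig:
    depth: int          # number of blocks kept (hypothetical dimension)
    width_mult: float   # channel width multiplier (hypothetical dimension)
    kernel_size: int    # convolution kernel size (hypothetical dimension)


# Hypothetical, tiny design space; a real OFA space is far larger.
SEARCH_SPACE = [
    SubnetConfig(d, w, k)
    for d, w, k in itertools.product((2, 3, 4), (0.75, 1.0), (3, 5, 7))
]


def search_subnet(
    accuracy_of: Callable[[SubnetConfig], float],
    latency_ms_of: Callable[[SubnetConfig], float],
    latency_budget_ms: float,
) -> Optional[SubnetConfig]:
    """Return the most accurate sub-network that fits the latency budget.

    No training happens here: the shared weights are assumed to have been
    trained once, so each new device or budget only reruns this search.
    """
    feasible = [c for c in SEARCH_SPACE if latency_ms_of(c) <= latency_budget_ms]
    if not feasible:
        return None
    return max(feasible, key=accuracy_of)


# Made-up predictors standing in for measured accuracy and on-device latency.
def toy_accuracy(c: SubnetConfig) -> float:
    return 0.6 + 0.03 * c.depth + 0.05 * c.width_mult + 0.01 * c.kernel_size


def toy_latency_ms(c: SubnetConfig) -> float:
    return c.depth * c.width_mult * c.kernel_size


for budget in (10.0, 5.0, 4.0):
    print(budget, search_subnet(toy_accuracy, toy_latency_ms, budget))
```

With these toy numbers, a 10 ms budget selects a deeper but narrower sub-network, a 5 ms budget falls back to the smallest one, and a 4 ms budget has no feasible option, all without any retraining.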